The Chinese room argument holds that a digital computer executing a program cannot have a "mind," "understanding" or "consciousness," regardless of how intelligently or human-like the program may make the computer behave. The argument was presented by philosopher John Searle in his paper, "Minds, Brains, and Programs", published in ''Behavioral and Brain Sciences'' in 1980. Similar arguments were presented by Gottfried Leibniz (1714), Anatoly Dneprov (1961), Lawrence Davis (1974) and Ned Block (1978). Searle's version has been widely discussed in the years since. The centerpiece of Searle's argument is a thought experiment known as the ''Chinese room''.

The argument is directed against the philosophical positions of functionalism and computationalism, which hold that the mind may be viewed as an information-processing system operating on formal symbols, and that simulation of a given mental state is sufficient for its presence. Specifically, the argument is intended to refute a position Searle calls strong AI: "The appropriately programmed computer with the right inputs and outputs would thereby have a mind in exactly the same sense human beings have minds."

Although it was originally presented in reaction to the statements of artificial intelligence (AI) researchers, it is not an argument against the goals of mainstream AI research because it does not show a limit in the amount of "intelligent" behavior a machine can display. The argument applies only to digital computers running programs and does not apply to machines in general.

Chinese room thought experiment

Searle's

thought experiment

A thought experiment is a hypothetical situation in which a hypothesis, theory, or principle is laid out for the purpose of thinking through its consequences.

History

The ancient Greek ''deiknymi'' (), or thought experiment, "was the most anc ...

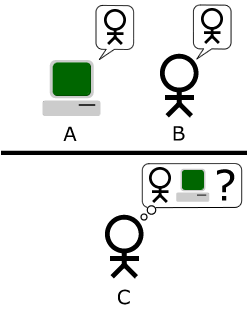

begins with this hypothetical premise: suppose that

artificial intelligence

Artificial intelligence (AI) is intelligence—perceiving, synthesizing, and inferring information—demonstrated by machines, as opposed to intelligence displayed by animals and humans. Example tasks in which this is done include speech r ...

research has succeeded in constructing a computer that behaves as if it understands

Chinese

Chinese can refer to:

* Something related to China

* Chinese people, people of Chinese nationality, citizenship, and/or ethnicity

**''Zhonghua minzu'', the supra-ethnic concept of the Chinese nation

** List of ethnic groups in China, people of ...

. It takes

Chinese character

Chinese characters () are logograms developed for the writing of Chinese. In addition, they have been adapted to write other East Asian languages, and remain a key component of the Japanese writing system where they are known as ''kanj ...

s as input and, by following the instructions of a

computer program

A computer program is a sequence or set of instructions in a programming language for a computer to execute. Computer programs are one component of software, which also includes documentation and other intangible components.

A computer program ...

, produces other Chinese characters, which it presents as output. Suppose, says Searle, that this computer performs its task so convincingly that it comfortably passes the

Turing test

The Turing test, originally called the imitation game by Alan Turing in 1950, is a test of a machine's ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human. Turing proposed that a human evaluato ...

: it convinces a human Chinese speaker that the program is itself a live Chinese speaker. To all of the questions that the person asks, it makes appropriate responses, such that any Chinese speaker would be convinced that they are talking to another Chinese-speaking human being.

The question Searle wants to answer is this: does the machine ''literally'' "understand" Chinese? Or is it merely ''simulating'' the ability to understand Chinese? Searle calls the first position "strong AI" and the latter "weak AI".

Searle then supposes that he is in a closed room and has a book with an English version of the computer program, along with sufficient papers, pencils, erasers, and filing cabinets. Searle could receive Chinese characters through a slot in the door, process them according to the program's instructions, and produce Chinese characters as output, without understanding any of the content of the Chinese writing. If the computer had passed the Turing test this way, it follows, says Searle, that he would do so as well, simply by running the program manually.

Searle asserts that there is no essential difference between the roles of the computer and himself in the experiment. Each simply follows a program, step-by-step, producing behavior that is then interpreted by the user as demonstrating intelligent conversation. However, Searle himself would not be able to understand the conversation. ("I don't speak a word of Chinese," he points out.) Therefore, he argues, it follows that the computer would not be able to understand the conversation either.

Searle argues that, without "understanding" (or "intentionality"), we cannot describe what the machine is doing as "thinking" and, since it does not think, it does not have a "mind" in anything like the normal sense of the word. Therefore, he concludes that the "strong AI" hypothesis is false.

History

Gottfried Leibniz made a similar argument in 1714 against mechanism (the position that the mind is a machine and nothing more). Leibniz used the thought experiment of expanding the brain until it was the size of a mill. Leibniz found it difficult to imagine that a "mind" capable of "perception" could be constructed using only mechanical processes.

Soviet cyberneticist Anatoly Dneprov made an essentially identical argument in 1961, in the form of the short story "The Game". In it, a stadium of people act as switches and memory cells implementing a program to translate a sentence of Portuguese, a language that none of them knows. The game was organized by a "Professor Zarubin" to answer the question "Can mathematical machines think?" Speaking through Zarubin, Dneprov writes "the only way to prove that machines can think is to turn yourself into a machine and examine your thinking process", and he concludes, as Searle does, "We’ve proven that even the most perfect simulation of machine thinking is not the thinking process itself."

In 1974, Lawrence Davis imagined duplicating the brain using telephone lines and offices staffed by people, and in 1978 Ned Block envisioned the entire population of China involved in such a brain simulation. This thought experiment is called the China brain, also the "Chinese Nation" or the "Chinese Gym".

Searle's version appeared in his 1980 paper "Minds, Brains, and Programs", published in ''Behavioral and Brain Sciences''. It eventually became the journal's "most influential target article", generating an enormous number of commentaries and responses in the ensuing decades, and Searle has continued to defend and refine the argument in many papers, popular articles and books. David Cole writes that "the Chinese Room argument has probably been the most widely discussed philosophical argument in cognitive science to appear in the past 25 years".

Most of the discussion consists of attempts to refute it. "The overwhelming majority", notes ''BBS'' editor Stevan Harnad, "still think that the Chinese Room Argument is dead wrong". The sheer volume of the literature that has grown up around it inspired Pat Hayes to comment that the field of cognitive science ought to be redefined as "the ongoing research program of showing Searle's Chinese Room Argument to be false".

Searle's argument has become "something of a classic in cognitive science", according to Harnad. Varol Akman agrees, and has described the original paper as "an exemplar of philosophical clarity and purity".

Philosophy

Although the Chinese Room argument was originally presented in reaction to the statements of artificial intelligence researchers, philosophers have come to consider it as an important part of the philosophy of mind. It is a challenge to functionalism and the computational theory of mind, and is related to such questions as the mind–body problem, the problem of other minds, the symbol-grounding problem, and the hard problem of consciousness.

Strong AI

Searle identified a philosophical position he calls "strong AI":

The appropriately programmed computer with the right inputs and outputs would thereby have a mind in exactly the same sense human beings have minds.

The definition depends on the distinction between ''simulating'' a mind and ''actually having'' a mind. Searle writes that "according to Strong AI, the correct simulation really is a mind. According to Weak AI, the correct simulation is a model of the mind."

The claim is implicit in some of the statements of early AI researchers and analysts. For example, in 1955, AI founder Herbert A. Simon declared that "there are now in the world machines that think, that learn and create". Simon, together with Allen Newell and Cliff Shaw, after having completed the first "AI" program, the Logic Theorist, claimed that they had "solved the venerable mind–body problem, explaining how a system composed of matter can have the properties of mind." John Haugeland wrote that "AI wants only the genuine article: ''machines with minds'', in the full and literal sense. This is not science fiction, but real science, based on a theoretical conception as deep as it is daring: namely, we are, at root, ''computers ourselves''."

Searle also ascribes the following claims to advocates of strong AI:

* AI systems can be used to explain the mind;

* The study of the brain is irrelevant to the study of the mind; and

* The Turing test is adequate for establishing the existence of mental states.

Strong AI as computationalism or functionalism

In more recent presentations of the Chinese room argument, Searle has identified "strong AI" as "computer functionalism" (a term he attributes to Daniel Dennett). Functionalism is a position in modern philosophy of mind that holds that we can define mental phenomena (such as beliefs, desires, and perceptions) by describing their functions in relation to each other and to the outside world. Because a computer program can accurately represent functional relationships as relationships between symbols, a computer can have mental phenomena if it runs the right program, according to functionalism.

Stevan Harnad argues that Searle's depictions of strong AI can be reformulated as "recognizable tenets of ''computationalism'', a position (unlike "strong AI") that is actually held by many thinkers, and hence one worth refuting."

Computationalism is the position in the philosophy of mind which argues that the mind can be accurately described as an information-processing system.

Each of the following, according to Harnad, is a "tenet" of computationalism:

* Mental states are computational states (which is why computers can have mental states and help to explain the mind);

* Computational states are implementation-independent: in other words, it is the software that determines the computational state, not the hardware (which is why the brain, being hardware, is irrelevant); and that

* Since implementation is unimportant, the only empirical data that matters is how the system functions; hence the Turing test is definitive.
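The tenet of implementation independence can be illustrated with a small sketch. The program, state names, and function names below are hypothetical and chosen only for illustration. The sketch shows one fixed set of rules executed by two different "implementations" (a hash-table lookup and a page-by-page scan of the kind a person might do by hand) that end in the same computational state; it does not bear on whether either implementation has mental states.

```python
# Hypothetical program: a two-state machine tracking the parity of "1" symbols.
# Each rule is (current state, input symbol, next state).
PROGRAM = [
    ("even", "0", "even"),
    ("even", "1", "odd"),
    ("odd", "0", "odd"),
    ("odd", "1", "even"),
]


def run_on_lookup_table(tape, start="even"):
    """Implementation 1: build a hash table and look each step up directly."""
    table = {(state, symbol): nxt for state, symbol, nxt in PROGRAM}
    state = start
    for symbol in tape:
        state = table[(state, symbol)]
    return state


def run_by_hand(tape, start="even"):
    """Implementation 2: scan the rule list line by line, as a person might."""
    state = start
    for symbol in tape:
        for rule_state, rule_symbol, nxt in PROGRAM:
            if (rule_state, rule_symbol) == (state, symbol):
                state = nxt
                break
    return state
```

Both functions return the same final state for any tape of "0" and "1" symbols, because the state is fixed by the rules rather than by how the rules are carried out.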

Strong AI vs. biological naturalism

Searle holds a philosophical position he calls "biological naturalism": that consciousness and understanding require specific biological machinery that is found in brains. He writes "brains cause minds" and that "actual human mental phenomena [are] dependent on actual physical–chemical properties of actual human brains". Searle argues that this machinery (known to neuroscience as the "neural correlates of consciousness") must have some causal powers that permit the human experience of consciousness. Searle's belief in the existence of these powers has been criticized.

Searle does not disagree with the notion that machines can have consciousness and understanding, because, as he writes, "we are precisely such machines". Searle holds that the brain is, in fact, a machine, but that the brain gives rise to consciousness and understanding using machinery that is non-computational. If neuroscience is able to isolate the mechanical process that gives rise to consciousness, then Searle grants that it may be possible to create machines that have consciousness and understanding. However, without the specific machinery required, Searle does not believe that consciousness can occur.

Biological naturalism implies that one cannot determine if the experience of consciousness is occurring merely by examining how a system functions, because the specific machinery of the brain is essential. Thus, biological naturalism is directly opposed to both behaviorism and functionalism (including "computer functionalism" or "strong AI"). Biological naturalism is similar to identity theory (the position that mental states are "identical to" or "composed of" neurological events); however, Searle has specific technical objections to identity theory. Searle's biological naturalism and strong AI are both opposed to Cartesian dualism, the classical idea that the brain and mind are made of different "substances". Indeed, Searle accuses strong AI of dualism, writing that "strong AI only makes sense given the dualistic assumption that, where the mind is concerned, the brain doesn't matter."

Consciousness

Searle's original presentation emphasized "understanding" (that is, mental states with what philosophers call "intentionality") and did not directly address other closely related ideas such as "consciousness". However, in more recent presentations Searle has included consciousness as the real target of the argument.

David Chalmers writes "it is fairly clear that consciousness is at the root of the matter" of the Chinese room.

Colin McGinn argues that the Chinese room provides strong evidence that the hard problem of consciousness is fundamentally insoluble. The argument, to be clear, is not about whether a machine can be conscious, but about whether it (or anything else for that matter) can be shown to be conscious. It is plain that any other method of probing the occupant of a Chinese room has the same difficulties in principle as exchanging questions and answers in Chinese. It is simply not possible to divine whether a conscious agency or some clever simulation inhabits the room.

Searle argues that this is only true for an observer ''outside'' of the room. The whole point of the thought experiment is to put someone ''inside'' the room, where they can directly observe the operations of consciousness. Searle claims that from his vantage point within the room there is nothing he can see that could imaginably give rise to consciousness, other than himself, and clearly he does not have a mind that can speak Chinese.

Applied ethics

Patrick Hew used the Chinese Room argument to deduce requirements for military command and control systems if they are to preserve a commander's moral agency. He drew an analogy between a commander in their command center and the person in the Chinese Room, and analyzed it under a reading of Aristotle’s notions of "compulsory" and "ignorance". Information could be "down converted" from meaning to symbols, and manipulated symbolically, but moral agency could be undermined if there was inadequate "up conversion" into meaning. Hew cited examples from the USS ''Vincennes'' incident.

Computer science

The Chinese room argument is primarily an argument in the philosophy of mind, and both major computer scientists and artificial intelligence researchers consider it irrelevant to their fields. However, several concepts developed by computer scientists are essential to understanding the argument, including symbol processing, Turing machines, Turing completeness, and the Turing test.

Strong AI vs. AI research

Searle's arguments are not usually considered an issue for AI research. Stuart Russell and Peter Norvig observe that most AI researchers "don't care about the strong AI hypothesis—as long as the program works, they don't care whether you call it a simulation of intelligence or real intelligence." The primary mission of artificial intelligence research is only to create useful systems that ''act'' intelligently, and it does not matter if the intelligence is "merely" a simulation.

Searle does not disagree that AI research can create machines that are capable of highly intelligent behavior. The Chinese room argument leaves open the possibility that a digital machine could be built that ''acts'' more intelligently than a person, but does not have a mind or intentionality in the same way that brains do.

Searle's "strong AI" should not be confused with "strong AI" as defined by Ray Kurzweil and other futurists, who use the term to describe machine intelligence that rivals or exceeds human intelligence. Kurzweil is concerned primarily with the ''amount'' of intelligence displayed by the machine, whereas Searle's argument sets no limit on this. Searle argues that even a superintelligent machine would not necessarily have a mind and consciousness.

Turing test

The Chinese room implements a version of the Turing test. Alan Turing introduced the test in 1950 to help answer the question "can machines think?" In the standard version, a human judge engages in a natural language conversation with a human and a machine designed to generate performance indistinguishable from that of a human being. All participants are separated from one another. If the judge cannot reliably tell the machine from the human, the machine is said to have passed the test.
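The standard version described above can be sketched as a simple protocol. This is a minimal illustration under stated assumptions, not a definitive formulation of the test: the names `run_trial` and `identification_rate`, the "A"/"B" labelling, and the idea of repeating trials to estimate how reliably the judge spots the machine are all hypothetical choices made for the example.

```python
import random


def run_trial(judge, human, machine, questions, rng):
    """Run one conversation; return True if the judge identifies the machine.

    `human` and `machine` map a question string to an answer string.
    `judge` receives a transcript of (question, answer_A, answer_B) tuples
    and returns the label ("A" or "B") it believes is the machine.
    """
    # Participants are separated: the judge sees only the labels A and B,
    # which are assigned at random for each trial.
    if rng.random() < 0.5:
        labels = {"A": machine, "B": human}
    else:
        labels = {"A": human, "B": machine}
    transcript = [(q, labels["A"](q), labels["B"](q)) for q in questions]
    guess = judge(transcript)
    return labels[guess] is machine


def identification_rate(judge, human, machine, questions, trials=1000, seed=0):
    """Fraction of trials in which the judge correctly picks out the machine."""
    rng = random.Random(seed)
    hits = sum(run_trial(judge, human, machine, questions, rng) for _ in range(trials))
    return hits / trials
```

Under these assumptions, a rate close to 0.5 means the judge is doing no better than guessing, which corresponds to the machine passing. Note that the sketch measures only the judge's success at discrimination; nothing in it inspects what happens inside either respondent.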

Turing then considered each possible objection to the proposal "machines can think", and found that there are simple, obvious answers if the question is de-mystified in this way. He did not, however, intend for the test to measure for the presence of "consciousness" or "understanding". He did not believe this was relevant to the issues that he was addressing. He wrote:

To Searle, as a philosopher investigating the nature of mind and consciousness, these are the relevant mysteries. The Chinese room is designed to show that the Turing test is insufficient to detect the presence of consciousness, even if the room can behave or function as a conscious mind would.

Symbol processing

The Chinese room (and all modern computers) manipulates physical objects in order to carry out calculations and do simulations. AI researchers Allen Newell and Herbert A. Simon called this kind of machine a physical symbol system. It is also equivalent to the formal systems used in the field of mathematical logic.

Searle emphasizes the fact that this kind of symbol manipulation is syntactic (borrowing a term from the study of grammar). The computer manipulates the symbols using a form of syntax rules, without any knowledge of the symbols' semantics (that is, their meaning).

Newell and Simon had conjectured that a physical symbol system (such as a digital computer) had all the necessary machinery for "general intelligent action", or, as it is known today, artificial general intelligence. They framed this as a philosophical position, the physical symbol system hypothesis: "A physical symbol system has the necessary and sufficient means for general intelligent action." The Chinese room argument does not refute this, because it is framed in terms of "intelligent action", i.e. the external behavior of the machine, rather than the presence or absence of understanding, consciousness and mind.

Chinese room and Turing completeness

The Chinese room has a design analogous to that of a modern computer. It has a von Neumann architecture, which consists of a program (the book of instructions), some memory (the papers and file cabinets), a CPU that follows the instructions (the man), and a means to write symbols in memory (the pencil and eraser). A machine with this design is known in theoretical computer science as "Turing complete", because it has the necessary machinery to carry out any computation that a Turing machine can do, and therefore it is capable of doing a step-by-step simulation of any other digital machine, given enough memory and time.
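The correspondence between the room and a stored-program machine can be made concrete with a minimal sketch. The code below is an illustration under stated assumptions, not part of Searle's or Turing's presentation: the function `run`, the rule table `FLIP_BITS` and the state names are all invented here. The rule table plays the role of the book of instructions, the tape plays the role of the papers, and the loop plays the role of the man, who looks up a rule and obeys it without interpreting any symbol.

```python
from collections import defaultdict

def run(program, tape_input, start="start", halt="halt", blank="_", max_steps=10_000):
    """Execute a rule table step by step, the way the man follows the book."""
    # Memory (the papers): an unbounded tape, blank wherever nothing was written.
    tape = defaultdict(lambda: blank, enumerate(tape_input))
    state, head = start, 0
    for _ in range(max_steps):
        if state == halt:
            break
        # The "CPU" step: look up the rule for (state, symbol) and obey it.
        write, move, state = program[(state, tape[head])]
        tape[head] = write                  # pencil and eraser
        head += 1 if move == "R" else -1    # shift attention one cell
    cells = [tape[i] for i in range(min(tape), max(tape) + 1)]
    return "".join(cells).strip(blank)

# Hypothetical program: flip every bit, then halt at the first blank cell.
FLIP_BITS = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

print(run(FLIP_BITS, "1011"))
```

Swapping in a different rule table changes what the machine computes without changing the loop, which is the sense in which one fixed mechanism can simulate any other digital machine given enough memory and time.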

Alan Turing writes, "all digital computers are in a sense equivalent." The widely accepted Church–Turing thesis holds that any function computable by an effective procedure is computable by a Turing machine.

The Turing completeness of the Chinese room implies that it can do whatever any other digital computer can do (albeit much, much more slowly). Thus, if the Chinese room does not or cannot contain a Chinese-speaking mind, then no other digital computer can contain a mind. Some replies to Searle begin by arguing that the room, as described, cannot have a Chinese-speaking mind. Arguments of this form, according to Stevan Harnad, are "no refutation (but rather an affirmation)" of the Chinese room argument, because these arguments actually imply that ''no'' digital computers can have a mind.

There are some critics, such as Hanoch Ben-Yami, who argue that the Chinese room cannot simulate all the abilities of a digital computer, such as being able to determine the current time.

Complete argument

Searle has produced a more formal version of the argument of which the Chinese Room forms a part. He presented the first version in 1984. The version given below is from 1990. The Chinese room thought experiment is intended to prove point A3.

He begins with three axioms:

:(A1) "Programs are formal (syntactic)."

::A program uses syntax to manipulate symbols and pays no attention to the semantics of the symbols. It knows where to put the symbols and how to move them around, but it does not know what they stand for or what they mean. For the program, the symbols are just physical objects like any others.

:(A2) "Minds have mental contents (semantics)."

::Unlike the symbols used by a program, our thoughts have meaning: they represent things and we know what it is they represent.

:(A3) "Syntax by itself is neither constitutive of nor sufficient for semantics."

::This is what the Chinese room thought experiment is intended to prove: the Chinese room has syntax (because there is a man in there moving symbols around). The Chinese room has no semantics (because, according to Searle, there is no one or nothing in the room that understands what the symbols mean). Therefore, having syntax is not enough to generate semantics.

Searle posits that these lead directly to this conclusion:

:(C1) Programs are neither constitutive of nor sufficient for minds.

::This should follow without controversy from the first three: Programs don't have semantics. Programs have only syntax, and syntax is insufficient for semantics. Every mind has semantics. Therefore no programs are minds.
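The step from the axioms to C1 can be checked mechanically under one particular reading. The Lean 4 sketch below is an illustration, not Searle's own formalization. It assumes that A1 and A3 together license the premise "no program has semantics" (given the hypothetical name `noSemantics`), which is the gloss stated above and is also the step that critics of A3 dispute. The names `Entity`, `Program`, `Mind` and `HasSemantics` are likewise invented here.

```lean
-- Sketch only: all predicate names are illustrative assumptions.
theorem c1 {Entity : Type} (Program Mind HasSemantics : Entity → Prop)
    -- A1 + A3, read strongly: programs are purely syntactic, so they lack semantics.
    (noSemantics : ∀ x, Program x → ¬ HasSemantics x)
    -- A2: every mind has semantics.
    (a2 : ∀ x, Mind x → HasSemantics x) :
    -- C1: no program is a mind.
    ∀ x, Program x → ¬ Mind x :=
  fun x hp hm => noSemantics x hp (a2 x hm)
```

The proof is a single application of modus tollens, so on this reading the inference itself is unproblematic and the whole weight of the argument rests on whether the premises are accepted.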

This much of the argument is intended to show that artificial intelligence can never produce a machine with a mind by writing programs that manipulate symbols. The remainder of the argument addresses a different issue. Is the human brain running a program? In other words, is the computational theory of mind correct? He begins with an axiom that is intended to express the basic modern scientific consensus about brains and minds:

:(A4) Brains cause minds.

Searle claims that we can derive "immediately" and "trivially" that:

:(C2) Any other system capable of causing minds would have to have causal powers (at least) equivalent to those of brains.

::Brains must have something that causes a mind to exist. Science has yet to determine exactly what it is, but it must exist, because minds exist. Searle calls it "causal powers". "Causal powers" is whatever the brain uses to create a mind. If anything else can cause a mind to exist, it must have "equivalent causal powers". "Equivalent causal powers" is whatever ''else'' that could be used to make a mind.

And from this he derives the further conclusions:

:(C3) Any artifact that produced mental phenomena, any artificial brain, would have to be able to duplicate the specific causal powers of brains, and it could not do that just by running a formal program.

::This follows from C1 and C2: Since no program can produce a mind, and "equivalent causal powers" produce minds, it follows that programs do not have "equivalent causal powers."

:(C4) The way that human brains actually produce mental phenomena cannot be solely by virtue of running a computer program.

::Since programs do not have "equivalent causal powers", "equivalent causal powers" produce minds, and brains produce minds, it follows that brains do not use programs to produce minds.

Refutations of Searle's argument take many different forms (see below). Computationalists and functionalists reject A3, arguing that "syntax" (as Searle describes it) ''can'' have "semantics" if the syntax has the right functional structure. Eliminative materialists reject A2, arguing that minds don't actually have "semantics": on their view, thoughts and other mental phenomena are inherently meaningless but nevertheless function as if they had meaning.

Replies

Replies to Searle's argument may be classified according to what they claim to show:

* Those which identify ''who'' speaks Chinese

* Those which demonstrate how meaningless symbols can become meaningful

* Those which suggest that the Chinese room should be redesigned in some way

* Those which contend that Searle's argument is misleading

* Those which argue that the argument makes false assumptions about subjective conscious experience and therefore proves nothing

Some of the arguments (robot and brain simulation, for example) fall into multiple categories.

Systems and virtual mind replies: finding the mind

These replies attempt to answer the question: since the man in the room doesn't speak Chinese, ''where'' is the "mind" that does? These replies address the key ontological issues of mind vs. body and simulation vs. reality. All of the replies that identify the mind in the room are versions of "the system reply".

The basic version of the system reply argues that it is the "whole system" that understands Chinese. While the man understands only English, when he is combined with the program, scratch paper, pencils and file cabinets, they form a system that can understand Chinese. "Here, understanding is not being ascribed to the mere individual; rather it is being ascribed to this whole system of which he is a part," Searle explains. The fact that a certain man does not understand Chinese is irrelevant, because it is only the system as a whole that matters.

Searle notes that (in this simple version of the reply) the "system" is nothing more than a collection of ordinary physical objects; it grants the power of understanding and consciousness to "the conjunction of that person and bits of paper" without making any effort to explain how this pile of objects has become a conscious, thinking being. Searle argues that no reasonable person should be satisfied with the reply, unless they are "under the grip of an ideology". In order for this reply to be remotely plausible, one must take it for granted that consciousness can be the product of an information processing "system", and does not require anything resembling the actual biology of the brain.

Searle then responds by simplifying this list of physical objects: he asks what happens if the man memorizes the rules and keeps track of everything in his head? Then the whole system consists of just one object: the man himself. Searle argues that if the man does not understand Chinese then the system does not understand Chinese either because now "the system" and "the man" both describe exactly the same object.

Critics of Searle's response argue that the program has allowed the man to have two minds in one head. If we assume a "mind" is a form of information processing, then the theory of computation can account for two computations occurring at once, namely (1) the computation for universal programmability (which is the function instantiated by the person and note-taking materials ''independently'' from any particular program contents) and (2) the computation of the Turing machine that is described by the program (which is instantiated by everything ''including'' the specific program). The theory of computation thus formally explains the open possibility that the second computation in the Chinese Room could entail a human-equivalent semantic understanding of the Chinese inputs. The focus belongs on the program's Turing machine rather than on the person's. However, from Searle's perspective, this argument is circular. The question at issue is whether consciousness is a form of information processing, and this reply requires that we make that assumption.

More sophisticated versions of the systems reply try to identify more precisely what "the system" is and they differ in exactly how they describe it. According to these replies, the "mind that speaks Chinese" could be such things as: the "software", a "program", a "running program", a simulation of the "neural correlates of consciousness", the "functional system", a "simulated mind", an "emergent property", or "a virtual mind" (described below).

Marvin Minsky suggested a version of the system reply known as the "virtual mind reply". The term "virtual" is used in computer science to describe an object that appears to exist "in" a computer (or computer network) only because software makes it appear to exist. The objects "inside" computers (including files, folders, and so on) are all "virtual", except for the computer's electronic components. Similarly, Minsky argues, a computer may contain a "mind" that is virtual in the same sense as virtual machines, virtual communities and virtual reality.

To clarify the distinction between the simple systems reply given above and the virtual mind reply, David Cole notes that two simulations could be running on one system at the same time: one speaking Chinese and one speaking Korean. While there is only one system, there can be multiple "virtual minds"; thus the "system" cannot be the "mind".
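Cole's point about one system hosting two simulations has a direct software analogue. The sketch below is purely illustrative: the rule books, the names `make_responder` and `host`, and the routing scheme are assumptions made for this example, and nothing in it bears on whether either program understands anything. It shows only that a single host can run two independent programs whose competences do not overlap.

```python
def make_responder(rule_book):
    """Build a separate 'virtual' conversational program from a rule book."""
    def respond(message):
        # Unknown input gets a fixed fallback; each program knows only its own rules.
        return rule_book.get(message, "?")
    return respond

# Hypothetical rule books, invented for illustration.
chinese = make_responder({"你好": "你好！"})
korean = make_responder({"안녕하세요": "안녕하세요!"})

def host(programs, inbox):
    """One physical system: route each message to the program it addresses."""
    return [programs[name](message) for name, message in inbox]

replies = host(
    {"zh": chinese, "ko": korean},
    [("zh", "你好"), ("ko", "안녕하세요"), ("zh", "안녕하세요")],
)
print(replies)
```

The third message is Korean text sent to the Chinese program, which fails to handle it even though the same host handled it a moment earlier through the other program. The host and the programs it runs are therefore distinct things to which abilities can be ascribed.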

Searle responds that such a mind is, at best, a simulation, and writes: "No one supposes that computer simulations of a five-alarm fire will burn the neighborhood down or that a computer simulation of a rainstorm will leave us all drenched." Nicholas Fearn responds that, for some things, simulation is as good as the real thing. "When we call up the pocket calculator function on a desktop computer, the image of a pocket calculator appears on the screen. We don't complain that 'it isn't ''really'' a calculator', because the physical attributes of the device do not matter." The question is, is the human mind like the pocket calculator, essentially composed of information? Or is the mind like the rainstorm, something other than a computer, and not realizable in full by a computer simulation? For decades, this question of simulation has led AI researchers and philosophers to consider whether the term "synthetic intelligence" is more appropriate than the common description of such intelligences as "artificial."

These replies provide an explanation of exactly who it is that understands Chinese. If there is something ''besides'' the man in the room that can understand Chinese, Searle cannot argue that (1) the man does not understand Chinese, therefore (2) nothing in the room understands Chinese. This, according to those who make this reply, shows that Searle's argument fails to prove that "strong AI" is false.

These replies, by themselves, do not provide any evidence that strong AI is ''true'', however. They do not show that the system (or the virtual mind) understands Chinese, other than the hypothetical premise that it passes the Turing test. Searle argues that, if we are to consider strong AI remotely plausible, the Chinese room is an example that requires explanation, and it is difficult or impossible to explain how consciousness might "emerge" from the room or how the system would have consciousness. As Searle writes, "the systems reply simply begs the question by insisting that the system must understand Chinese", and thus is dodging the question or hopelessly circular.

Robot and semantics replies: finding the meaning

As far as the person in the room is concerned, the symbols are just meaningless "squiggles." But if the Chinese room really "understands" what it is saying, then the symbols must get their meaning from somewhere. These arguments attempt to connect the symbols to the things they symbolize. These replies address Searle's concerns about intentionality, symbol grounding and syntax vs. semantics.

Robot reply

:Suppose that instead of a room, the program was placed into a robot that could wander around and interact with its environment. This would allow a "causal connection" between the symbols and things they represent. Hans Moravec comments: "If we could graft a robot to a reasoning program, we wouldn't need a person to provide the meaning anymore: it would come from the physical world."

:Searle's reply is to suppose that, unbeknownst to the individual in the Chinese room, some of the inputs came directly from a camera mounted on a robot, and some of the outputs were used to manipulate the arms and legs of the robot. Nevertheless, the person in the room is still just following the rules, and ''does not know what the symbols mean''. Searle writes, "he doesn't ''see'' what comes into the robot's eyes." (See Mary's room for a similar thought experiment.)

Derived meaning

:Some respond that the room, as Searle describes it, ''is'' connected to the world: through the Chinese speakers that it is "talking" to and through the programmers who designed the knowledge base in his file cabinet. The symbols Searle manipulates ''are already meaningful''; they're just not meaningful to ''him''.

:Searle says that the symbols only have a "derived" meaning, like the meaning of words in books. The meaning of the symbols depends on the conscious understanding of the Chinese speakers and the programmers outside the room. The room, like a book, has no understanding of its own.

Commonsense knowledge / contextualist reply

:Some have argued that the meanings of the symbols would come from a vast "background" of commonsense knowledge encoded in the program and the filing cabinets. This would provide a "context" that would give the symbols their meaning.

:Searle agrees that this background exists, but he does not agree that it can be built into programs. Hubert Dreyfus has also criticized the idea that the "background" can be represented symbolically.

To each of these suggestions, Searle's response is the same: no matter how much knowledge is written into the program and no matter how the program is connected to the world, he is still in the room manipulating symbols according to rules. His actions are syntactic and this can never explain to him what the symbols stand for. Searle writes, "syntax is insufficient for semantics."

However, for those who accept that Searle's actions simulate a mind, separate from his own, the important question is not what the symbols mean ''to Searle'' but what they mean ''to the virtual mind''. While Searle is trapped in the room, the virtual mind is not: it is connected to the outside world through the Chinese speakers it speaks to, through the programmers who gave it world knowledge, and through the cameras and other sensors that roboticists can supply.

Brain simulation and connectionist replies: redesigning the room

These arguments are all versions of the systems reply that identify a particular ''kind'' of system as being important; they identify some special technology that would create conscious understanding in a machine. (The "robot" and "commonsense knowledge" replies above also specify a certain kind of system as being important.)

Brain simulator reply

:Suppose that the program simulated in fine detail the action of every neuron in the brain of a Chinese speaker. This strengthens the intuition that there would be no significant difference between the operation of the program and the operation of a live human brain.

:Searle replies that such a simulation does not reproduce the important features of the brain—its causal and intentional states.

Searle is adamant that "human mental phenomena [are] dependent on actual physical–chemical properties of actual human brains." Moreover, he argues:

Two variations on the brain simulator reply are the China brain and the brain-replacement scenario.

China brain

:What if we ask each citizen of China to simulate one neuron, using the telephone system to simulate the connections between axons and dendrites? In this version, it seems obvious that no individual would have any understanding of what the brain might be saying. It is also obvious that this system would be functionally equivalent to a brain, so if consciousness is a function, this system would be conscious.

Brain replacement scenario

:In this, we are asked to imagine that engineers have invented a tiny computer that simulates the action of an individual neuron. What would happen if we replaced one neuron at a time? Replacing one would clearly do nothing to change conscious awareness. Replacing all of them would create a digital computer that simulates a brain. If Searle is right, then conscious awareness must disappear during the procedure (either gradually or all at once). Searle's critics argue that there would be no point during the procedure when he can claim that conscious awareness ends and mindless simulation begins. (See Ship of Theseus for a similar thought experiment.)

Connectionist replies

:Closely related to the brain simulator reply, this claims that a massively parallel connectionist architecture would be capable of understanding.

Combination reply

:This response combines the robot reply with the brain simulation reply, arguing that a brain simulation connected to the world through a robot body could have a mind.

Many mansions / wait till next year reply

:Better technology in the future will allow computers to understand. Searle agrees that this is possible, but considers this point irrelevant. Searle agrees that there may be designs that would cause a machine to have conscious understanding.

These arguments (and the robot or commonsense knowledge replies) identify some special technology that would help create conscious understanding in a machine. They may be interpreted in two ways. Either they claim that (1) this technology is required for consciousness, the Chinese room does not or cannot implement this technology, and therefore the Chinese room cannot pass the Turing test or (even if it did) would not have conscious understanding; or they claim that (2) it is easier to see that the Chinese room has a mind if we visualize this technology as being used to create it.

In the first case, where features like a robot body or a connectionist architecture are required, Searle claims that strong AI (as he understands it) has been abandoned. The Chinese room has all the elements of a Turing complete machine, and thus is capable of simulating any digital computation whatsoever. If Searle's room cannot pass the Turing test then there is no other digital technology that could pass the Turing test. If Searle's room ''could'' pass the Turing test, but still does not have a mind, then the Turing test is not sufficient to determine if the room has a "mind". Either way, it denies one or the other of the positions Searle thinks of as "strong AI", proving his argument.

The brain arguments in particular deny strong AI if they assume that there is no simpler way to describe the mind than to create a program that is just as mysterious as the brain was. He writes "I thought the whole idea of strong AI was that we don't need to know how the brain works to know how the mind works." If computation does not provide an ''explanation'' of the human mind, then strong AI has failed, according to Searle.

Other critics hold that the room as Searle described it does, in fact, have a mind, but they argue that this is difficult to see: Searle's description is correct, but ''misleading''. By redesigning the room more realistically, they hope to make this more obvious. In this case, these arguments are being used as appeals to intuition (see next section).

In fact, the room can just as easily be redesigned to ''weaken'' our intuitions. Ned Block's Blockhead argument suggests that the program could, in theory, be rewritten into a simple lookup table of rules of the form "if the user writes ''S'', reply with ''P'' and goto ''X''". At least in principle, any program can be rewritten (or "refactored") into this form, even a brain simulation. In the blockhead scenario, the entire mental state is hidden in the letter ''X'', which represents a memory address: a number associated with the next rule. It is hard to visualize that an instant of one's conscious experience can be captured in a single large number, yet this is exactly what "strong AI" claims. On the other hand, such a lookup table would be ridiculously large (to the point of being physically impossible), and the states could therefore be ''extremely'' specific.
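The rule form "if the user writes ''S'', reply with ''P'' and goto ''X''" can be sketched as a table keyed by the current state and the input. The example below is a toy under stated assumptions, not Block's own construction: the table entries, the name `converse` and the integer states are all invented for illustration.

```python
# Hypothetical table: (state X, user input S) -> (reply P, next state X').
TABLE = {
    (0, "hello"): ("hi, who are you?", 1),
    (1, "alice"): ("nice to meet you, alice", 2),
    (1, "bob"): ("nice to meet you, bob", 3),
    (2, "who am i?"): ("you are alice", 2),
    (3, "who am i?"): ("you are bob", 3),
}

def converse(table, inputs, state=0):
    """Answer each input by pure lookup; the only memory is the state number."""
    replies = []
    for s in inputs:
        reply, state = table[(state, s)]   # "reply with P and goto X"
        replies.append(reply)
    return replies, state

print(converse(TABLE, ["hello", "alice", "who am i?"]))
```

The program appears to remember the user's name, yet nothing is stored except the integer state: being in state 2 rather than state 3 is the whole of its "memory". Each additional fact to be remembered multiplies the number of states required, which is why a table covering realistic conversations would be astronomically large.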

Searle argues that however the program is written or however the machine is connected to the world, the mind is being ''simulated'' by a simple step-by-step digital machine (or machines). These machines are always just like the man in the room: they understand nothing and do not speak Chinese. They are merely manipulating symbols without knowing what they mean. Searle writes: "I can have any formal program you like, but I still understand nothing."

Speed and complexity: appeals to intuition

The following arguments (and the intuitive interpretations of the arguments above) do not directly explain how a Chinese speaking mind could exist in Searle's room, or how the symbols he manipulates could become meaningful. However, by raising doubts about Searle's intuitions they support other positions, such as the system and robot replies. These arguments, if accepted, prevent Searle from claiming that his conclusion is obvious by undermining the intuitions that his certainty requires.

Several critics believe that Searle's argument relies entirely on intuitions.

Ned Block writes "Searle's argument depends for its force on intuitions that certain entities do not think."

Daniel Dennett describes the Chinese room argument as a misleading "intuition pump" and writes "Searle's thought experiment depends, illicitly, on your imagining too simple a case, an irrelevant case, and drawing the 'obvious' conclusion from it."

Some of the arguments above also function as appeals to intuition, especially those that are intended to make it seem more plausible that the Chinese room contains a mind, which can include the robot, commonsense knowledge, brain simulation and connectionist replies. Several of the replies above also address the specific issue of complexity. The connectionist reply emphasizes that a working artificial intelligence system would have to be as complex and as interconnected as the human brain. The commonsense knowledge reply emphasizes that any program that passed a Turing test would have to be "an extraordinarily supple, sophisticated, and multilayered system, brimming with 'world knowledge' and meta-knowledge and meta-meta-knowledge", as Daniel Dennett explains.

Many of these critiques emphasize speed and complexity of the human brain, which processes information at 100 billion operations per second (by some estimates). Several critics point out that the man in the room would probably take millions of years to respond to a simple question, and would require "filing cabinets" of astronomical proportions. This brings the clarity of Searle's intuition into doubt.
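
A rough back-of-the-envelope calculation illustrates the scale involved. The sketch below takes the figure of 100 billion operations per second quoted above and assumes, purely for illustration, that the man in the room carries out one operation per second by hand. The hand rate and the function name `years_by_hand` are assumptions, not figures taken from the critics.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, about 3.16e7 seconds


def years_by_hand(brain_seconds, brain_ops_per_sec=100e9, hand_ops_per_sec=1.0):
    """Years needed to hand-simulate `brain_seconds` of brain activity."""
    total_ops = brain_seconds * brain_ops_per_sec
    return total_ops / hand_ops_per_sec / SECONDS_PER_YEAR


print(round(years_by_hand(1)))    # one second of brain time
print(round(years_by_hand(600)))  # ten minutes of brain time
```

Under these assumptions, a single second of brain activity would take roughly three thousand years to work through by hand, and about ten minutes of brain activity would take nearly two million years.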

An especially vivid version of the speed and complexity reply is from Paul and Patricia Churchland. They propose this analogous thought experiment: "Consider a dark room containing a man holding a bar magnet or charged object. If the man pumps the magnet up and down, then, according to Maxwell's theory of artificial luminance (AL), it will initiate a spreading circle of electromagnetic waves and will thus be luminous. But as all of us who have toyed with magnets or charged balls well know, their forces (or any other forces for that matter), even when set in motion produce no luminance at all. It is inconceivable that you might constitute real luminance just by moving forces around!" The Churchlands' point is that the man would have to wave the magnet up and down something like 450 trillion times per second in order to see anything.
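
The figure of 450 trillion oscillations per second can be checked against the standard relation between wavelength and frequency, λ = c / f. The sketch below is an illustrative calculation only: the visible range of roughly 380 to 750 nm is a commonly cited approximation, the hand-pumping rate of 2 Hz is an assumption, and the function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact by definition)


def wavelength_nm(frequency_hz):
    """Wavelength in nanometres of an electromagnetic wave in vacuum."""
    return SPEED_OF_LIGHT / frequency_hz * 1e9


def is_visible(frequency_hz, low_nm=380.0, high_nm=750.0):
    """True if the wavelength falls within the approximate visible range."""
    return low_nm <= wavelength_nm(frequency_hz) <= high_nm


magnet_hz = 450e12  # "450 trillion times per second"
hand_hz = 2.0       # assumed rate for a man pumping a magnet by hand
print(wavelength_nm(magnet_hz), is_visible(magnet_hz), is_visible(hand_hz))
```

At 450 trillion hertz the wavelength comes out at about 666 nm, near the red end of the visible spectrum, whereas a magnet pumped by hand would radiate at wavelengths far too long to see.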

Stevan Harnad is critical of speed and complexity replies when they stray beyond addressing our intuitions. He writes "Some have made a cult of speed and timing, holding that, when accelerated to the right speed, the computational may make a phase transition into the mental. It should be clear that is not a counterargument but merely an ''ad hoc'' speculation (as is the view that it is all just a matter of ratcheting up to the right degree of 'complexity.')"

Searle argues that his critics are also relying on intuitions, but that his opponents' intuitions have no empirical basis. He writes that, in order to consider the "system reply" as remotely plausible, a person must be "under the grip of an ideology". The system reply only makes sense (to Searle) if one assumes that any "system" can have consciousness, just by virtue of being a system with the right behavior and functional parts. This assumption, he argues, is not tenable given our experience of consciousness.

Other minds and zombies: meaninglessness

Several replies argue that Searle's argument is irrelevant because his assumptions about the mind and consciousness are faulty. Searle believes that human beings directly experience their consciousness, intentionality and the nature of the mind every day, and that this experience of consciousness is not open to question. He writes that we must "presuppose the reality and knowability of the mental." The replies below question whether Searle is justified in using his own experience of consciousness to determine that it is more than mechanical symbol processing. In particular, the other minds reply argues that we cannot use our experience of consciousness to answer questions about other minds (even the mind of a computer); the eliminative materialist reply argues that Searle's own personal consciousness does not "exist" in the sense that Searle thinks it does; and the epiphenomena replies question whether we can make any argument at all about something like consciousness, which cannot, by definition, be detected by any experiment.

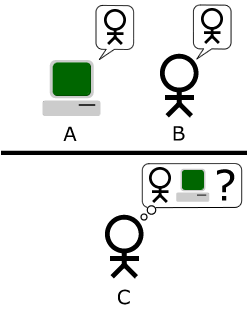

The "Other Minds Reply" points out that Searle's argument is a version of the problem of other minds, applied to machines. There is no way we can determine if other people's subjective experience is the same as our own. We can only study their behavior (i.e., by giving them our own Turing test). Critics of Searle argue that he is holding the Chinese room to a higher standard than we would hold an ordinary person.

Nils Nilsson writes "If a program behaves ''as if'' it were multiplying, most of us would say that it is, in fact, multiplying. For all I know, Searle may only be behaving ''as if'' he were thinking deeply about these matters. But, even though I disagree with him, his simulation is pretty good, so I'm willing to credit him with real thought."

Alan Turing anticipated Searle's line of argument (which he called "The Argument from Consciousness") in 1950 and made the other minds reply. He noted that people never consider the problem of other minds when dealing with each other. He writes that "instead of arguing continually over this point it is usual to have the polite convention that everyone thinks." The Turing test simply extends this "polite convention" to machines. He does not intend to solve the problem of other minds (for machines or people) and he does not think we need to.

Several philosophers argue that consciousness, as Searle describes it, does not exist. This position is sometimes referred to as eliminative materialism: the view that consciousness is not a concept that can "enjoy reduction" to a strictly mechanical (i.e. material) description. Instead, it is a concept that will simply be ''eliminated'' once the way the ''material'' brain works is fully understood, just as the concept of a demon has already been eliminated from science rather than reduced to a strictly mechanical description. On this view, our experience of consciousness is, as Daniel Dennett describes it, a "user illusion". Other mental properties, such as original intentionality (also called "meaning", "content", and "semantic character"), are also commonly regarded as special properties related to beliefs and other propositional attitudes. Eliminative materialism maintains that propositional attitudes such as beliefs and desires, among other intentional mental states that have content, do not exist. If eliminative materialism is the correct scientific account of human cognition, then the assumption of the Chinese room argument that "minds have mental contents (semantics)" must be rejected.

Stuart Russell and Peter Norvig argue that if we accept Searle's description of intentionality, consciousness, and the mind, we are forced to accept that consciousness is epiphenomenal: that it "casts no shadow", i.e. is undetectable in the outside world. They argue that Searle must be mistaken about the "knowability of the mental", and in his belief that there are "causal properties" in our neurons that give rise to the mind. They point out that, by Searle's own description, these causal properties cannot be detected by anyone outside the mind; otherwise the Chinese Room could not pass the Turing test, because the people outside would be able to tell there was not a Chinese speaker in the room by detecting their causal properties. Since they cannot detect causal properties, they cannot detect the existence of the mental. In short, Searle's "causal properties" and consciousness itself are undetectable, and anything that cannot be detected either does not exist or does not matter.

Mike Alder makes the same point, which he calls the "Newton's Flaming Laser Sword Reply". He argues that the entire argument is frivolous, because it is non-verificationist: not only is the distinction between ''simulating'' a mind and ''having'' a mind ill-defined, but it is also irrelevant because no experiments were, or even can be, proposed to distinguish between the two.

Daniel Dennett provides this extension to the "epiphenomena" argument. Suppose that, by some mutation, a human being is born that does not have Searle's "causal properties" but nevertheless acts exactly like a human being. (This sort of animal is called a "

zombie